TechSpot means tech analysis and advice you can trust.

Editor's take: In the ever-evolving world of GenAI, important advances are happening across chips, software, models, networking, and systems that combine all these elements. That's what makes it so hard to keep up with the latest AI developments. The difficulty factor becomes even greater if you're a vendor building these kinds of products and working not only to keep up, but to drive those advances forward. Toss in a competitor that's virtually cornered the market – and in the process, grown into one of the world's most valuable companies – and, well, things can appear pretty challenging.

That's the situation AMD found itself in as it entered its latest Advancing AI event. But rather than letting these potential roadblocks deter them, AMD made it clear that they are inspired to expand their vision, their range of offerings, and the pace at which they are delivering new products.

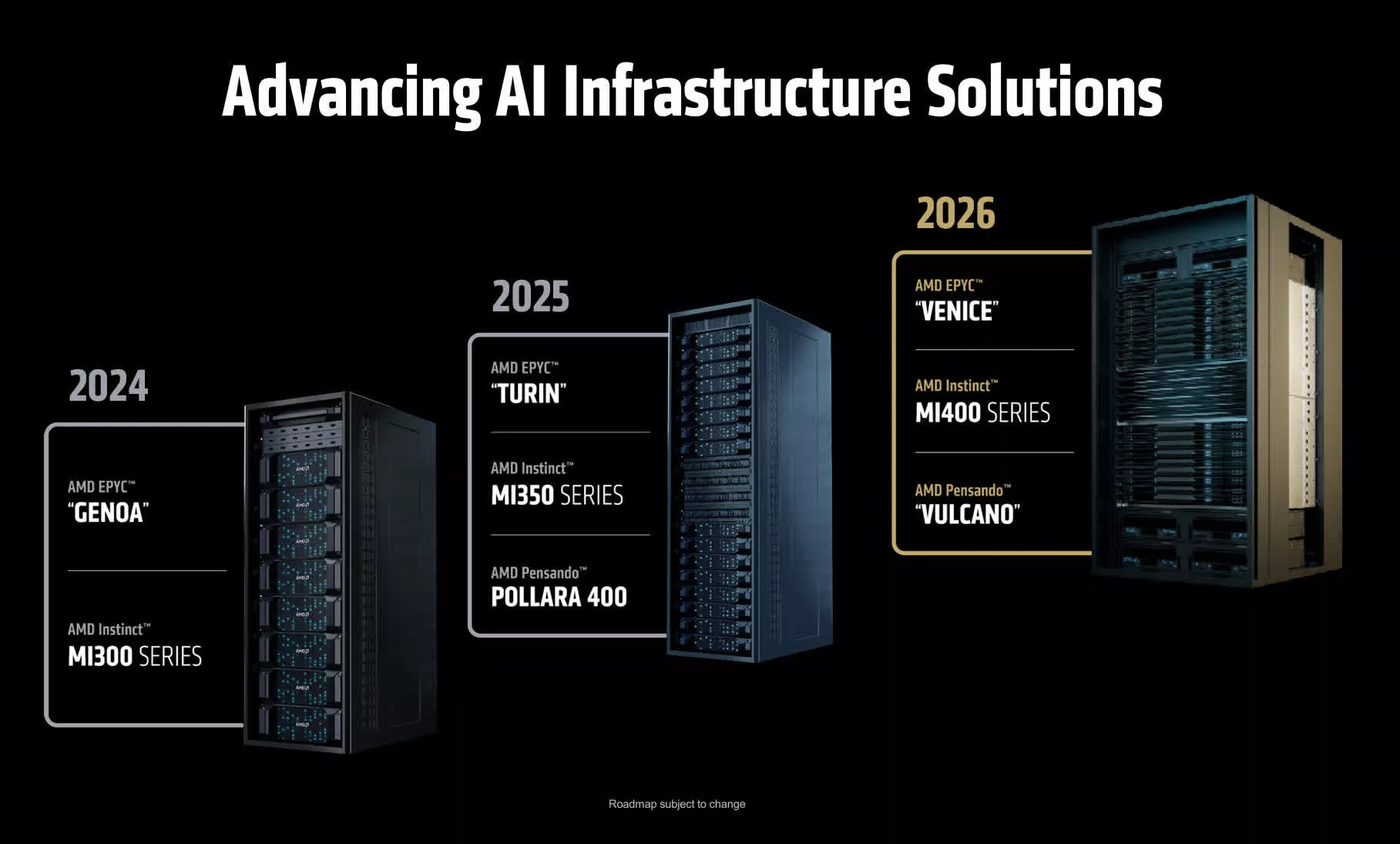

From unveiling their Instinct MI400 GPU accelerators and next-generation "Vulcano" networking chips to version 7 of their ROCm software and the debut of a new Helios rack architecture, AMD highlighted all the key aspects of AI infrastructure and GenAI-powered solutions. In fact, one of the first takeaways from the event was how far the company's reach now extends across all the critical parts of the AI ecosystem.

AMD Instinct roadmap

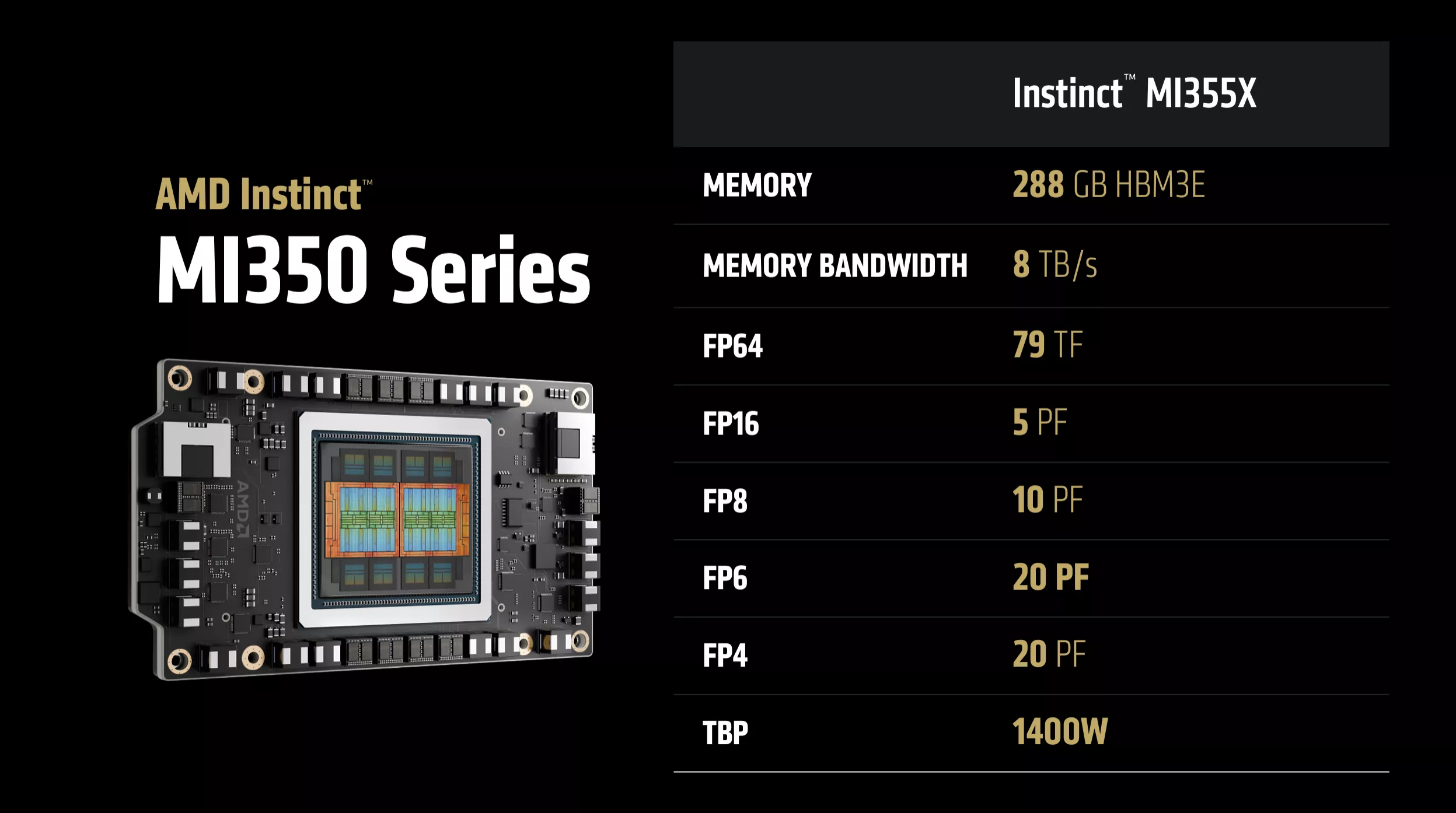

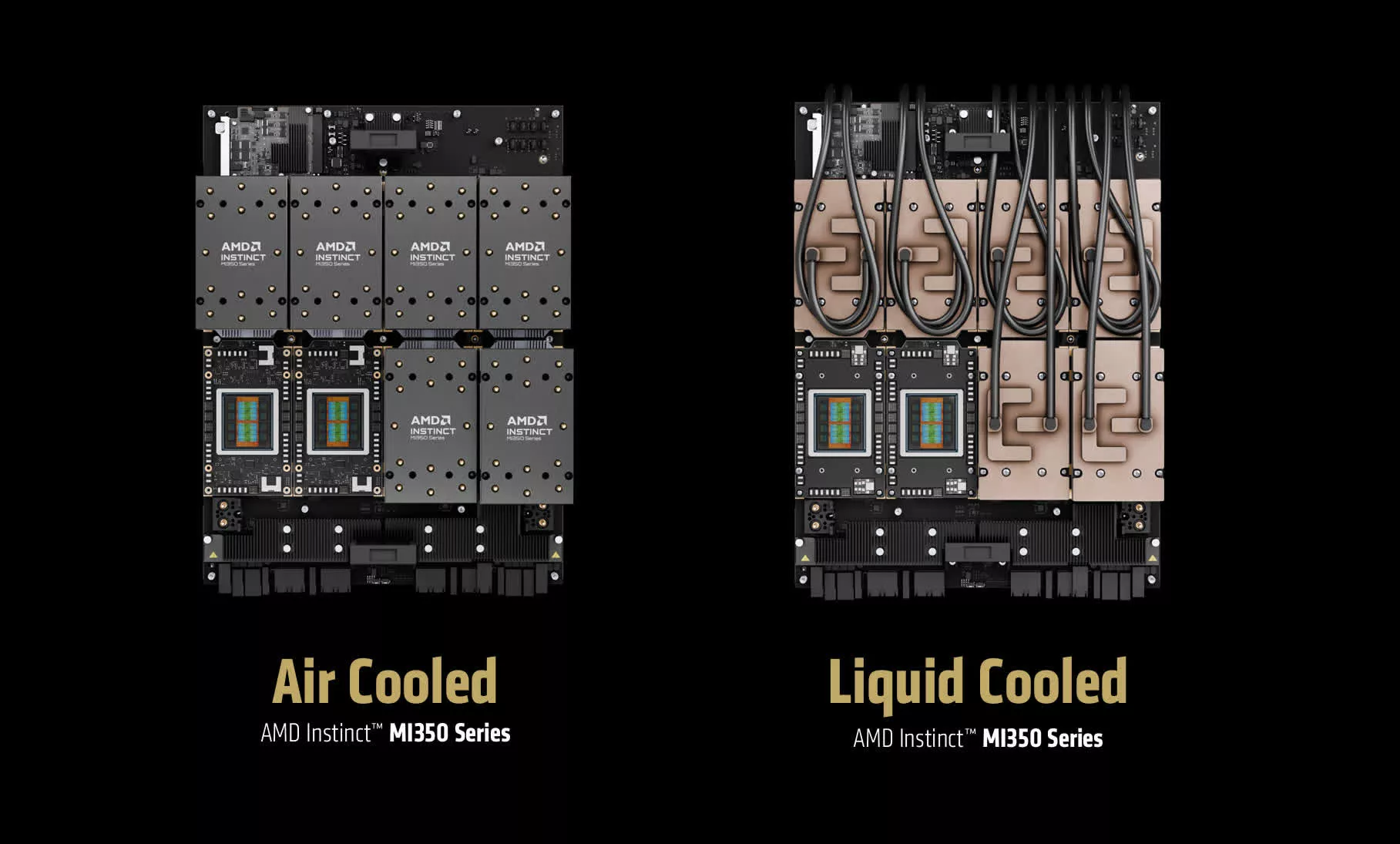

As expected, there was a great deal of focus on the official launch of the Instinct MI350 and higher-wattage, faster-performing MI355X GPU-based chips, which AMD announced last year. Both are built on a 3nm process, feature up to 288 GB of HBM3E memory, and can be used in both liquid-cooled and air-cooled designs.

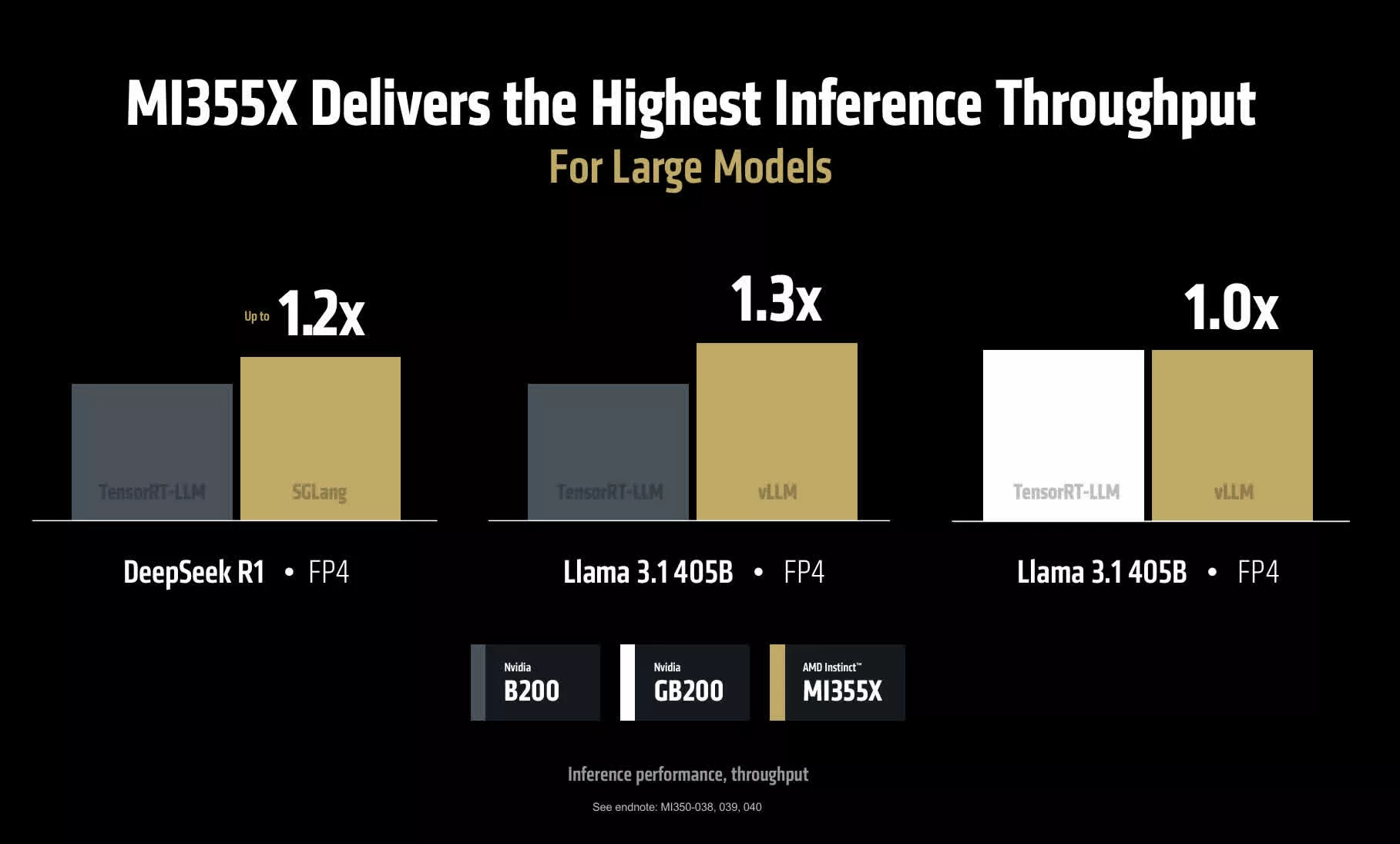

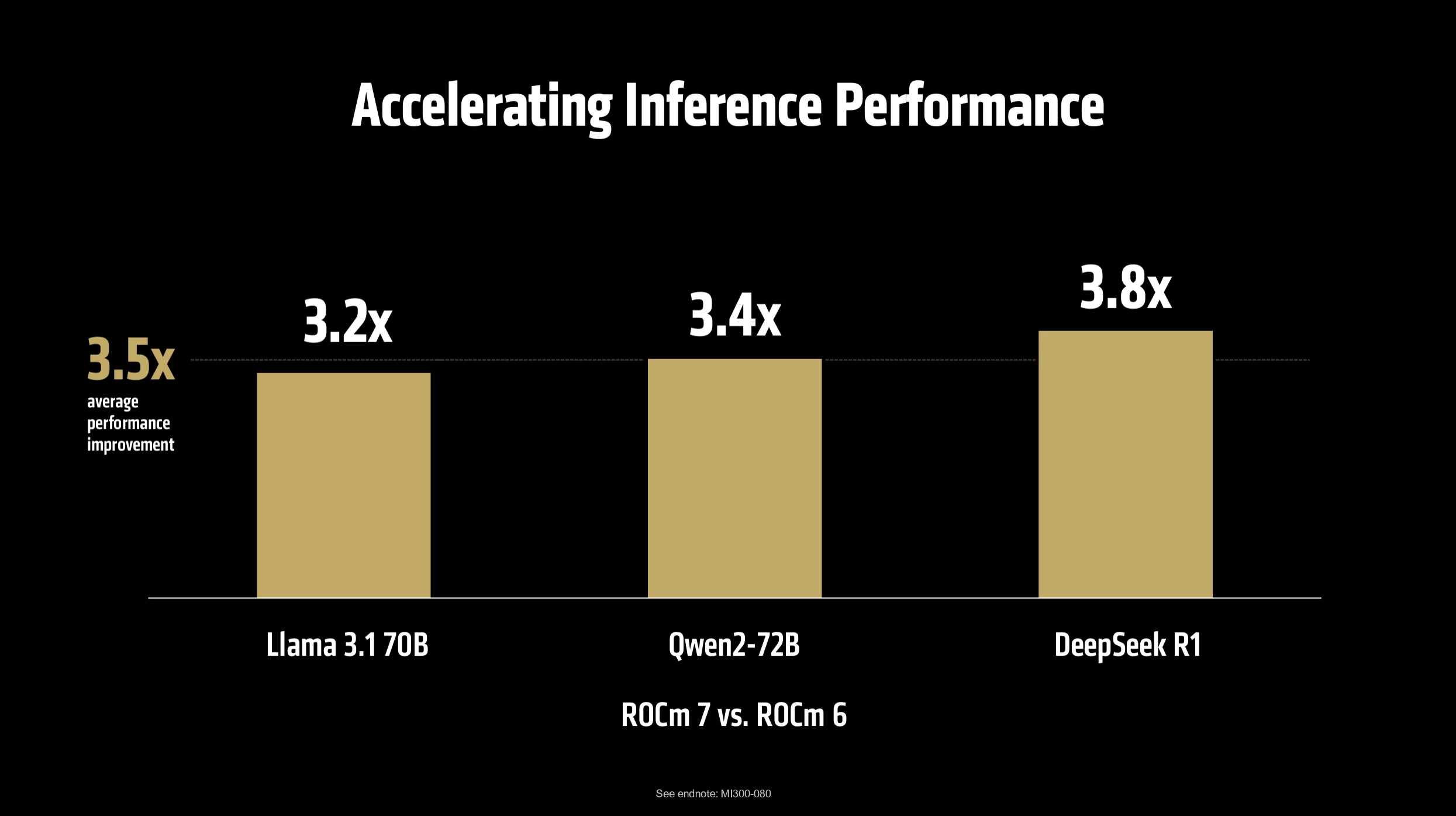

According to AMD's testing, these chips not only match the performance of Nvidia's Blackwell B200, but even surpass it on certain benchmarks. In particular, AMD emphasized improvements in inferencing speed (over 3x faster than the previous generation), as well as cost per token (up to 40% more tokens per dollar vs. the B200, according to AMD).
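It is worth being careful about what a tokens-per-dollar claim implies, since "40% more tokens per dollar" is not the same as "40% cheaper per token." The short sketch below converts one figure into the other. The function name is purely illustrative, and the only input taken from AMD's claim is the 40% number.

```python
def cost_per_token_reduction(tokens_per_dollar_gain: float) -> float:
    """Convert a fractional gain in tokens per dollar into the
    equivalent fractional drop in cost per token.

    Cost per token is the reciprocal of tokens per dollar, so a gain
    of g scales the cost by 1 / (1 + g).
    """
    if tokens_per_dollar_gain <= -1.0:
        raise ValueError("gain must be greater than -100%")
    return 1.0 - 1.0 / (1.0 + tokens_per_dollar_gain)


# AMD's claimed "up to 40% more tokens per dollar" (vendor figure, not verified here)
reduction = cost_per_token_reduction(0.40)
print(f"Cost per token drops by about {reduction:.1%}")
```

In other words, if the claim holds, the cost per token would fall by a bit under 30%, not by 40%.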

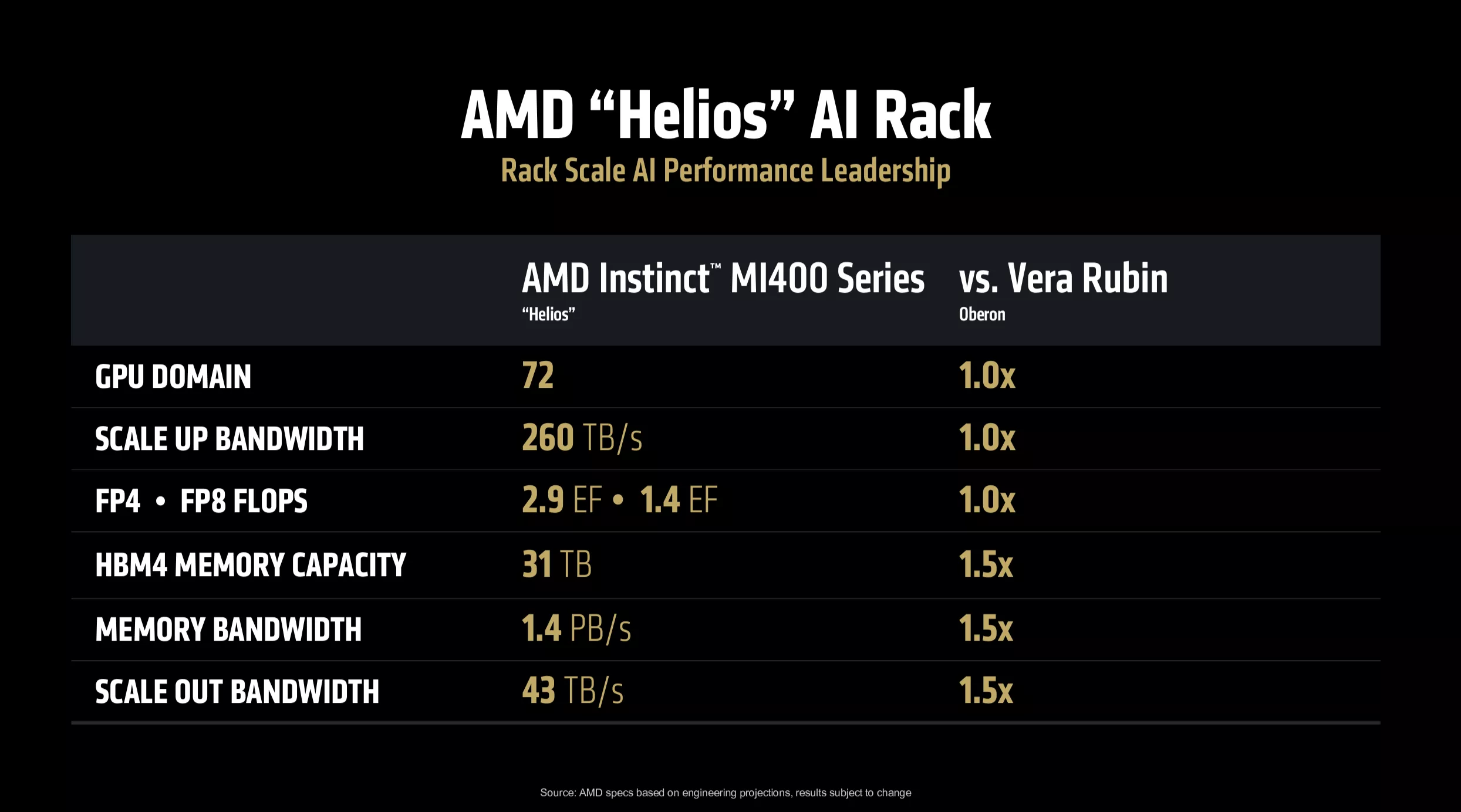

AMD also provided more details on its next-generation MI400, scheduled for release next year, and even teased the MI500 for 2027. The MI400 will offer up to 432 GB of HBM4 memory, memory bandwidth of 19.6 TB/sec, and 300 GB/sec of scale-out bandwidth – all of which will be important for both running larger models and assembling the kinds of large rack systems expected to be needed for next-generation LLMs.
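To get a rough feel for why memory capacity matters for larger models, here is a back-of-the-envelope sketch of how many parameters could fit in 432 GB if memory held nothing but model weights. The precisions and bytes-per-parameter values are illustrative assumptions, not AMD specifications, and the estimate ignores the KV cache, activations, and runtime overhead, so real limits would be lower.

```python
# Assumed storage cost per parameter for a few common numeric formats.
# These are illustrative values, not figures from AMD.
BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def max_params_billions(hbm_gb: float, precision: str) -> float:
    """Upper bound on model size (billions of parameters) whose weights
    alone fit in `hbm_gb` gigabytes, taking 1 GB as 10**9 bytes."""
    return hbm_gb / BYTES_PER_PARAM[precision]


for precision in BYTES_PER_PARAM:
    limit = max_params_billions(432, precision)
    print(f"{precision}: up to ~{limit:.0f}B parameters (weights only)")
```

Under these assumptions, a single 432 GB accelerator could hold the weights of a model in the low hundreds of billions of parameters at 16-bit precision, and proportionally more at lower precisions.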

Some of the more surprising announcements from the event focused on networking.

First was a discussion of AMD's next-generation Pensando networking chip and a network interface card they're calling the AMD Pensando Pollara 400 AI NIC, which the company claims is the industry's first shipping AI-powered network card. AMD is part of the Ultra Ethernet Consortium and, not surprisingly, the Pollara 400 uses the Ultra Ethernet standard. It reportedly offers 20% improvements in speed and 20x more capacity to scale than competitive cards using InfiniBand technology.

As with its GPUs, AMD also previewed its next-generation networking chip, codenamed "Vulcano," designed for large AI clusters. It will offer 800 Gb/sec network speeds and up to 8x the scale-out performance for large groups of GPUs when released in 2026.

AMD also touted the new open Ultra Accelerator Link (UALink) standard for GPU-to-GPU and other chip-to-chip connections. A direct answer to Nvidia's NVLink technology, UALink is based on AMD's Infinity Fabric and, according to AMD, matches the performance of Nvidia's technology while providing more flexibility by enabling connections between any company's GPUs and CPUs.

Putting all of these various elements together, arguably the biggest hardware news – both literally and figuratively – from the Advancing AI event was AMD's new rack architecture designs.

Large cloud providers, neocloud operators, and even some sophisticated enterprises have been moving toward rack-based complete solutions for their AI infrastructure, so it was not surprising to see AMD make these announcements – particularly after acquiring expertise from ZT Systems, a company that designs rack computing systems, earlier this year.

Still, it was an important step to show a complete offering that competes against Nvidia's NVL72 with even more advanced capabilities, and to demonstrate how all the pieces of AMD's silicon solutions can work together.

In addition to showing systems based on their current 2025 chip offerings, AMD also unveiled their Helios rack architecture, coming in 2026. It will leverage a complete suite of AMD chips, including next-generation Epyc CPUs (codenamed Venice), Instinct MI400 GPUs, and the Vulcano networking chip. What's important about Helios is that it demonstrates AMD will not only be on equal footing with the next-generation Vera Rubin-based rack systems that Nvidia has announced for next year, but may even surpass them.

In fact, AMD arguably took a page from the recent Nvidia playbook by offering a multi-year preview of its silicon and rack-architecture roadmaps, making it clear that they are not resting on their laurels but moving aggressively forward with critical technology developments.

Importantly, they did so while touting what they expect will be equivalent or better performance from these new options. (Of course, all of these are based on estimates of expected performance, which could – and likely will – change for both companies.) Regardless of what the final numbers prove to be, the bigger point is that AMD is clearly confident enough in its current and future product roadmaps to take on the toughest competition. That says a lot.

ROCm and software developments

As mentioned earlier, the key software story for AMD was the release of version 7 of its open-source ROCm software stack. The company highlighted multiple performance improvements on inferencing workloads, as well as increased day-zero compatibility with many of the most popular LLMs. They also discussed ongoing work with other critical AI software frameworks and development tools. There was a particular focus on enabling enterprises to use ROCm for their own in-house development efforts through ROCm Enterprise AI.

On their own, some of these changes are modest, but collectively they show clear software momentum that AMD has been building. Strategically, this is critical, because competition against Nvidia's CUDA software stack continues to be the biggest challenge AMD faces in convincing organizations to adopt its solutions. It will be interesting to see how AMD integrates some of its recent AI software-related acquisitions – including Lamini, Brium, and Untether AI – into its range of software offerings.

One of the more surprising bits of software news from AMD was the integration of ROCm support into Windows and the Windows ML AI software stack. This helps make Windows a more useful platform for AI developers and potentially opens up new opportunities to better leverage AMD GPUs and NPUs for on-device AI acceleration.

Speaking of developers, AMD also used the event to announce its AMD Developer Cloud for software designers, which gives them a free resource (at least initially, via free cloud credits) to access MI300-based infrastructure and build applications with ROCm-based software tools. Again, a small but critically important step in demonstrating how the company is working to expand its influence across the AI software development ecosystem.

Clearly, the collective actions the company is taking are starting to make an impact. AMD welcomed a broad range of customers leveraging its solutions in a big way, including OpenAI, Microsoft, Oracle Cloud, Humain, Meta, xAI, and many more.

They also talked about their work in creating sovereign AI deployments in countries around the world. And ultimately, as the company said at the start of the keynote, it's all about continuing to build trust among its customers, partners, and potential new clients.

AMD has the benefit of being an extremely strong alternative to Nvidia – one that many in the market want to see increase its presence for competitive balance. Based on what was announced at Advancing AI, it looks like AMD is moving in the right direction.

Bob O'Donnell is the founder and chief analyst of TECHnalysis Research, LLC, a technology consulting firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on X @bobodtech.