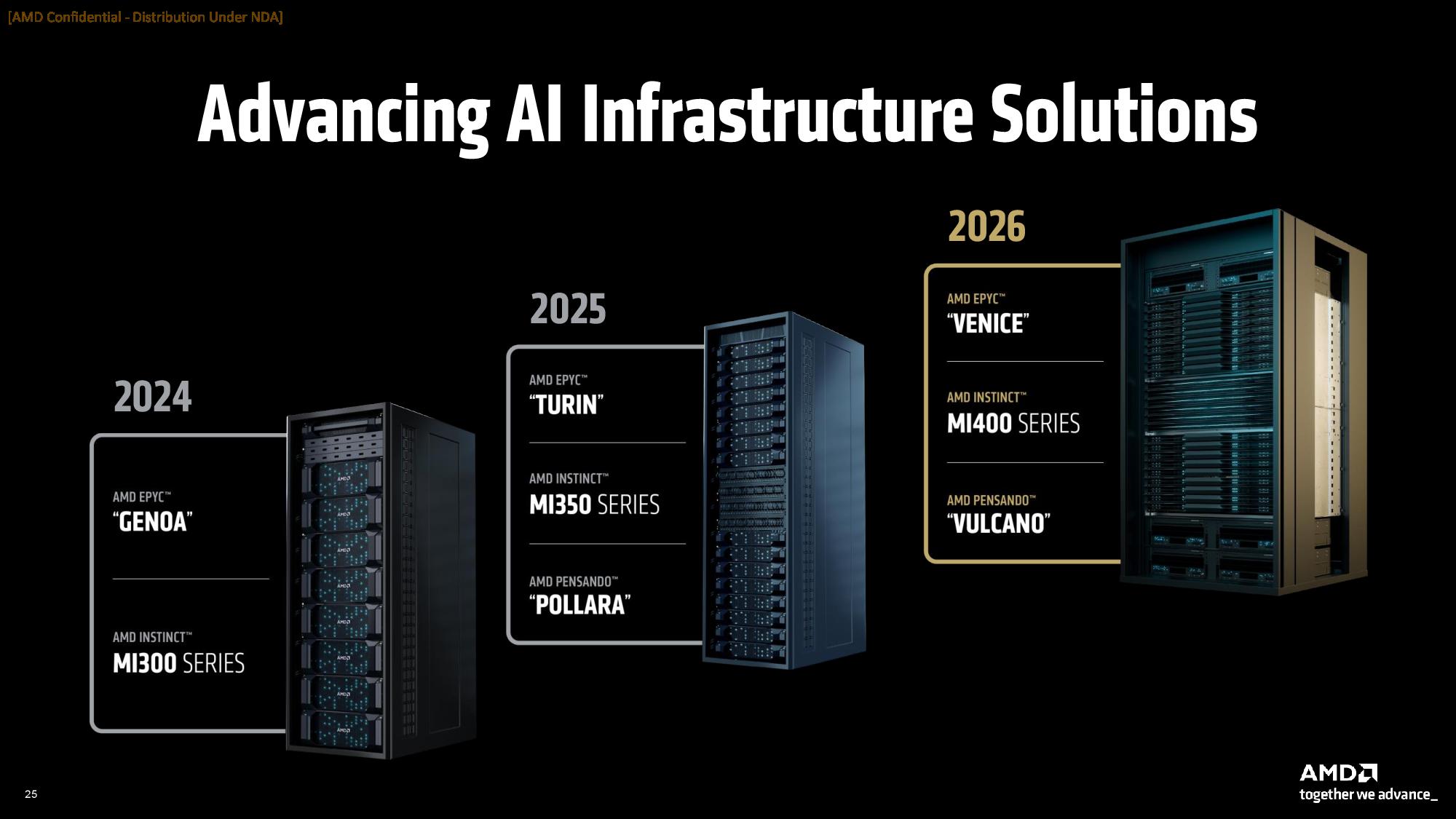

AMD gave a preview of its first in-house-designed rack-scale solution, called Helios, at its Advancing AI event on Thursday. The system is set to be based on the company's next-generation EPYC 'Venice' processors, will use its Instinct MI400-series accelerators, and will rely on network connections featuring the upcoming Pensando network cards.

When it comes to rack-scale solutions for AI, AMD clearly trails Nvidia. This will change somewhat this year, as cloud service providers (such as Oracle OCI), OEMs, and ODMs build and deploy rack-scale solutions based on the Instinct MI350X-series GPUs. However, those systems will not be designed by AMD, and they will have to interconnect each 8-way system using Ethernet rather than low-latency, high-bandwidth interconnects like NVLink.


| Year | 2025 | 2026 | 2024 | 2025 | 2026 | 2027 |
|---|---|---|---|---|---|---|
| Density | 128 | 128 | NVL72 | NVL72 | NVL144 | NVL576 |
| GPU Architecture | CDNA 4 | CDNA 5 | Blackwell | Blackwell Ultra | Rubin | Rubin Ultra |
| GPU/GPU+CPU | MI355X | MI400X | GB200 | GB300 | VR200 | VR300 |
| Compute Chiplets | 256 | ? | 144 | 144 | 144 | 576 |
| GPU Packages | 128 | 128 | 72 | 72 | 72 | 144 |
| FP4 PFLOPS (Dense) | 1,280 | 2,560 | 720 | 1,080 | 3,600 | 14,400 |
| HBM Capacity | 36 TB | 55 TB | 14 TB | 21 TB | 21 TB | 147 TB |
| HBM Bandwidth | 1,024 TB/s | almost 2,560 TB/s | 576 TB/s | 576 TB/s | 936 TB/s | 4,608 TB/s |
| CPU | EPYC 'Turin' | EPYC 'Venice' | 72-core Grace | 72-core Grace | 88-core Vera | 88-core Vera |
| NVSwitch/UALink/IF | - | UALink/IF | NVSwitch 5.0 | NVSwitch 5.0 | NVSwitch 6.0 | NVSwitch 7.0 |
| NVSwitch Bandwidth | ? | ? | 3,600 GB/s | 3,600 GB/s | 7,200 GB/s | 14,400 GB/s |
| Scale-Out | ? | ? | 800G, copper | 800G, copper | 1600G, optics | 1600G, optics |
| Form-Factor Name | OEM/ODM proprietary | Helios | Oberon | Oberon | Oberon | Kyber |
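The FP4 and HBM bandwidth rows of this rack-level table are, in effect, the GPU package count multiplied by the per-package figures from the GPU comparison table later in this article. The minimal Python sketch below reproduces those two rows under that simple-multiplication assumption. `RACKS` and `rack_totals` are hypothetical, illustrative names, not anything AMD or Nvidia publish. For GB300, it assumes 8 TB/s per package, the value implied by the 576 TB/s rack figure.

```python
# Hypothetical sketch: rebuild the rack-level FP4 and HBM bandwidth rows
# from per-package figures. Assumption: rack totals are plain
# package_count * per_package products (no efficiency losses modeled).

# name: (GPU packages per rack, dense FP4 PFLOPS per package, HBM TB/s per package)
RACKS = {
    "MI355X rack": (128, 10, 8),
    "Helios (MI400X)": (128, 20, 20),  # MI400X bandwidth is "almost" 20 TB/s
    "GB200 NVL72": (72, 10, 8),
    "GB300 NVL72": (72, 15, 8),  # 8 TB/s assumed, matching the 576 TB/s rack row
    "VR200 NVL144": (72, 50, 13),
    "VR300 NVL576": (144, 100, 32),
}


def rack_totals(packages: int, fp4_per_pkg: int, bw_per_pkg: int) -> tuple[int, int]:
    """Return (rack dense FP4 PFLOPS, rack HBM bandwidth in TB/s)."""
    return packages * fp4_per_pkg, packages * bw_per_pkg


if __name__ == "__main__":
    for name, spec in RACKS.items():
        fp4, bw = rack_totals(*spec)
        print(f"{name:16} {fp4:>6} PFLOPS FP4  {bw:>5} TB/s HBM")
```

Running it reproduces the FP4 and bandwidth rows, e.g., 2,560 PFLOPS and 2,560 TB/s for Helios. HBM capacity is omitted because the table rounds those figures inconsistently.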

The real change will come next year with the first AMD-designed rack-scale system, Helios, which will use Zen 6-powered EPYC 'Venice' CPUs, CDNA 'Next'-based Instinct MI400-series GPUs, and Pensando 'Vulcano' network interface cards (NICs). The NICs are rumored to increase the maximum scale-up world size beyond eight GPUs, which would greatly enhance the system's training and inference capabilities. The system will adhere to OCP standards and enable next-generation interconnects such as Ultra Ethernet and Ultra Accelerator Link, supporting demanding AI workloads.

"So let me introduce the Helios AI rack," said Andrew Dieckman, corporate VP and general manager of AMD's data center GPU business. "Helios is one of the system solutions that we are working on based on the Instinct MI400-series GPU, so it is a fully integrated AI rack with EPYC CPUs, Instinct MI400-series GPUs, Pensando NICs, and then our ROCm stack. It is a unified architecture designed for both frontier model training as well as massive scale inference [that] delivers leadership compute density, memory bandwidth, scale out interconnect, all built in an open OCP-compliant standard supporting Ultra Ethernet and UALink."

From a performance point of view, AMD's flagship Instinct MI400-series AI GPU (we will refer to it as the Instinct MI400X, though this is not the official name, and we will refer to CDNA Next as CDNA 5) doubles the performance of the Instinct MI355X, increases memory capacity by 50%, and increases memory bandwidth by more than 100%. While the MI355X delivers 10 dense FP4 PFLOPS, the MI400X is projected to hit 20 dense FP4 PFLOPS.
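These generation-on-generation claims are simple ratios of per-package specifications. The minimal Python sketch below makes the arithmetic explicit. It assumes the per-package figures listed in this article's GPU table and takes the MI400X's bandwidth as 20 TB/s, the upper end of "almost 20 TB/s" and consistent with the 2,560 TB/s rack figure for 128 GPUs. `uplift` is a hypothetical helper.

```python
# Hypothetical sketch: MI355X -> MI400X uplift from per-package specs.
# "MI400X" is this article's unofficial label for the flagship MI400-series part.
# Assumption: MI400X bandwidth taken as 20 TB/s (listed as "almost" 20 TB/s).

MI355X = {"fp4_dense_pflops": 10, "hbm_capacity_gb": 288, "hbm_bandwidth_tbps": 8}
MI400X = {"fp4_dense_pflops": 20, "hbm_capacity_gb": 432, "hbm_bandwidth_tbps": 20}


def uplift(old: dict[str, float], new: dict[str, float]) -> dict[str, float]:
    """Return the new/old ratio for every metric present in both dicts."""
    return {k: new[k] / old[k] for k in old if k in new}


if __name__ == "__main__":
    for metric, ratio in uplift(MI355X, MI400X).items():
        # Show both the multiplier and the percentage increase.
        print(f"{metric:20} x{ratio:.2f}  ({(ratio - 1) * 100:+.0f}%)")
```

The ratios come out to 2.0x compute, 1.5x capacity (+50%), and 2.5x bandwidth (+150%). The bandwidth ratio is what lies behind the "more than 100%" wording.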

Overall, the company says that the flagship MI400X is 10 times more powerful than the MI300X, which is remarkable progress given that the MI400X will be released about three years after the MI300X.

"When you look at our product roadmap and how we continue to accelerate, with MI355X, we have taken a major leap forward [compared to the MI300X]: we are delivering 3X the amount of performance on a broad set of models and workloads, and that is a significant uptick from the previous trajectory we were on from the MI300X with the MI325X," said Dieckman. "Now, with the Instinct MI400X and Helios, we bend that curve even further, and Helios is designed to deliver up to 10X more AI performance on the most advanced frontier models in the high end."


| Year | 2025 | 2026 | 2024 | 2025 | 2026 | 2027 |
|---|---|---|---|---|---|---|
| Architecture | CDNA 4 | CDNA 5 | Blackwell | Blackwell Ultra | Rubin | Rubin Ultra |
| GPU | MI355X | MI400X | B200 | B300 (Ultra) | VR200 | VR300 (Ultra) |
| Process Technology | N3P | ? | 4NP | 4NP | N3P (3NP?) | N3P (3NP?) |
| Physical Configuration | 2 x Reticle Sized GPU | ? | 2 x Reticle Sized GPUs | 2 x Reticle Sized GPUs | 2 x Reticle Sized GPUs, 2x I/O chiplets | 4 x Reticle Sized GPUs, 2x I/O chiplets |
| Packaging | CoWoS-S | ? | CoWoS-L | CoWoS-L | CoWoS-L | CoWoS-L |
| FP4 PFLOPS (per Package) | 10 | 20 | 10 | 15 | 50 | 100 |
| FP8/INT6 PFLOPS (per Package) | 5/- | 10/? | 4.5 | 10 | ? | ? |
| INT8 PFLOPS (per Package) | 5 | ? | 4.5 | 0.319 | ? | ? |
| BF16 PFLOPS (per Package) | 2.5 | ? | 2.25 | 5 | ? | ? |
| TF32 PFLOPS (per Package) | ? | ? | 1.12 | 2.5 | ? | ? |
| FP32 PFLOPS (per Package) | 153.7 TFLOPS | ? | 1.12 | 0.083 | ? | ? |
| FP64/FP64 Tensor TFLOPS (per Package) | 78.6 | ? | 40 | 1.39 | ? | ? |
| Memory | 288 GB HBM3E | 432 GB HBM4 | 192 GB HBM3E | 288 GB HBM3E | 288 GB HBM4 | 1 TB HBM4E |
| Memory Bandwidth | 8 TB/s | almost 20 TB/s | 8 TB/s | 8 TB/s | 13 TB/s | 32 TB/s |
| HBM Stacks | 8 | 12 | 6 | 8 | 8 | 16 |
| NVLink/UALink | Infinity Fabric | UALink, Infinity Fabric | NVLink 5.0, 200 GT/s | NVLink 5.0, 200 GT/s | NVLink 6.0 | NVLink 7.0 |
| SerDes Speed (Gb/s unidirectional) | ? | ? | 224G | 224G | 224G | 224G |
| GPU TDP | 1400 W | 1600 W (?) | 1200 W | 1400 W | 1800 W | 3600 W |
| CPU | 128-core EPYC 'Turin' | EPYC 'Venice' | 72-core Grace | 72-core Grace | 88-core Vera | 88-core Vera |

The new MI400X accelerator will also surpass Nvidia's Blackwell Ultra, which is currently ramping up. However, compared with Nvidia's next-generation Rubin R200, which delivers 50 dense FP4 PFLOPS, AMD's MI400X will offer only about 40% of that FP4 throughput, making it around 2.5 times slower. Still, AMD will have an ace up its sleeve: memory capacity and bandwidth, where the MI400X's 432 GB of HBM4 and almost 20 TB/s outpace the R200's 288 GB and 13 TB/s. Similarly, Helios will outperform Nvidia's Blackwell Ultra-based NVL72 on paper, and while it trails the Rubin-based NVL144 in dense FP4 compute (2,560 vs. 3,600 PFLOPS), it offers considerably more HBM capacity and bandwidth.
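These head-to-head comparisons can be checked against the paper specifications in this article's tables. The minimal Python sketch below divides AMD figures by Nvidia figures so that ratios above 1.0 favor AMD. `SPECS` and `compare` are hypothetical names, and rack HBM capacity is computed as packages times per-package GB rather than taken from the rounded table values.

```python
# Hypothetical sketch: AMD/Nvidia spec ratios from this article's tables.
# Paper specs only; real-world throughput will differ.

SPECS = {
    # name: (dense FP4 PFLOPS, HBM capacity in GB, HBM bandwidth in TB/s)
    "MI400X": (20, 432, 20),
    "VR200": (50, 288, 13),
    "Helios": (2560, 128 * 432, 2560),  # 128 MI400X packages per the table
    "GB300 NVL72": (1080, 72 * 288, 576),
    "VR200 NVL144": (3600, 72 * 288, 936),
}

METRICS = ("FP4 compute", "HBM capacity", "HBM bandwidth")


def compare(amd: str, nvidia: str) -> dict[str, float]:
    """Return the AMD/Nvidia ratio for each metric (values > 1.0 favor AMD)."""
    return {m: a / n for m, a, n in zip(METRICS, SPECS[amd], SPECS[nvidia])}


if __name__ == "__main__":
    pairs = [("MI400X", "VR200"), ("Helios", "GB300 NVL72"), ("Helios", "VR200 NVL144")]
    for amd, nvidia in pairs:
        ratios = compare(amd, nvidia)
        print(f"{amd} vs {nvidia}: " + ", ".join(f"{m} {r:.2f}x" for m, r in ratios.items()))
```

On these numbers, the MI400X delivers 0.40x of the VR200's dense FP4 throughput (the 2.5x gap) but about 1.5x its HBM capacity and bandwidth. Helios lands at roughly 0.71x of NVL144 in FP4 compute and about 2.7x in HBM bandwidth.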

However, it remains to be seen how Helios will stack up against NVL144 in real-world applications. Also, it will be extremely hard to beat Nvidia's NVL576 in terms of both compute performance and memory bandwidth in 2027, though by that time, AMD will likely roll out something new.

At least, this is what AMD communicated at its Advancing AI event this week: the company plans to continue evolving its integrated AI platforms with next-generation GPUs, CPUs, and networking technology, extending its roadmap well into 2027 and beyond.

Follow Tom's Hardware on Google News to get our up-to-date news, analysis, and reviews in your feeds. Make sure to click the Follow button.
