OpenAI's GPT-5 AI model is significantly more capable than its predecessors, but it is also dramatically more power-hungry: about 8.6 times more than the previous GPT-4, according to estimates based on tests conducted by the University of Rhode Island's AI lab, reports The Guardian. OpenAI does not officially disclose the energy use of its latest model, which is part of what raises concerns about its overall energy footprint. That said, the published findings are just an estimate, and that estimate relies on a lot of estimates.

The University of Rhode Island's AI lab estimates that GPT-5 averages just over 18 Wh per query, so running all of ChatGPT's reported 2.5 billion daily requests through the model could push energy use as high as 45 GWh per day.

A daily energy use of 45 GWh is enormous. A typical modern nuclear reactor produces between 1 and 1.6 GW of electrical power, or roughly 24 to 38 GWh over a full day. Spread over 24 hours, 45 GWh works out to an average draw of about 1.9 GW, so data centers running GPT-5 at 18 Wh per query could require the output of roughly two nuclear reactors running around the clock, an amount that could be enough to power a small country.
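The arithmetic behind the daily total and the reactor comparison can be checked in a few lines. This is a minimal sketch that uses only the article's rounded figures (18 Wh per query, 2.5 billion queries per day, 1 to 1.6 GW per reactor). Because a reactor's rating is a rate of power rather than an amount of energy, the sketch first converts GWh per day into an average draw in GW before dividing by reactor output.

```python
HOURS_PER_DAY = 24


def daily_energy_gwh(wh_per_query: float, queries_per_day: float) -> float:
    """Total energy per day in GWh (1 GWh = 1e9 Wh)."""
    return wh_per_query * queries_per_day / 1e9


def reactors_needed(daily_gwh: float, reactor_gw: float) -> float:
    """Reactors running around the clock needed to supply daily_gwh each day."""
    average_gw = daily_gwh / HOURS_PER_DAY  # energy per day -> average power
    return average_gw / reactor_gw


# Figures quoted in the article.
energy = daily_energy_gwh(18.0, 2.5e9)  # about 18 Wh x 2.5 billion queries
print(f"Daily energy:  {energy:.1f} GWh")
print(f"Average power: {energy / HOURS_PER_DAY:.2f} GW")
for reactor_gw in (1.0, 1.6):
    print(f"Reactors at {reactor_gw} GW each: {reactors_needed(energy, reactor_gw):.2f}")
```

With these inputs the average draw is about 1.9 GW, which corresponds to roughly 1.2 to 1.9 reactors depending on reactor size.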

The university based its report on estimates that producing a medium-length, 1,000-token GPT-5 response can consume up to 40 watt-hours (Wh) of electricity, with an average of 18.35 Wh, up from 2.12 Wh for GPT-4. That was higher than every other model tested except OpenAI's o3 (25.35 Wh) and DeepSeek's R1 (20.90 Wh).
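The per-response averages reported by the lab can be put side by side to reproduce the headline comparison. This small sketch uses only the four figures quoted above; GPT-5's 18.35 Wh works out to roughly 8.66 times GPT-4's 2.12 Wh.

```python
# Average energy per 1,000-token response (Wh), as reported by the URI lab.
AVERAGE_WH = {
    "GPT-5": 18.35,
    "GPT-4": 2.12,
    "o3": 25.35,
    "DeepSeek R1": 20.90,
}


def ratio(model: str, baseline: str) -> float:
    """How many times more energy `model` uses per response than `baseline`."""
    return AVERAGE_WH[model] / AVERAGE_WH[baseline]


print(f"GPT-5 vs GPT-4: {ratio('GPT-5', 'GPT-4'):.2f}x")

# Rank the tested models from most to least energy per response.
for name, wh in sorted(AVERAGE_WH.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:12s} {wh:6.2f} Wh")
```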

But it's important to point out that the lab's test methodology is far from ideal.

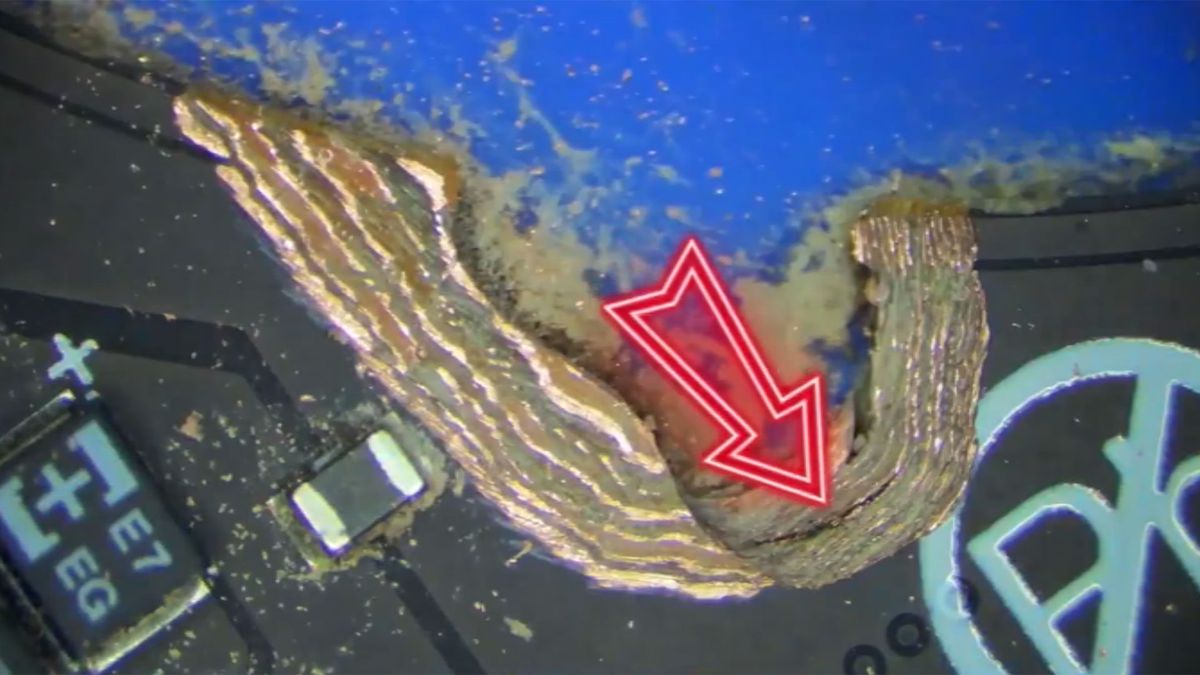

The team measured GPT-5’s power consumption by combining two key factors: how long the model took to respond to a given request, and the estimated average power draw of the hardware running it.

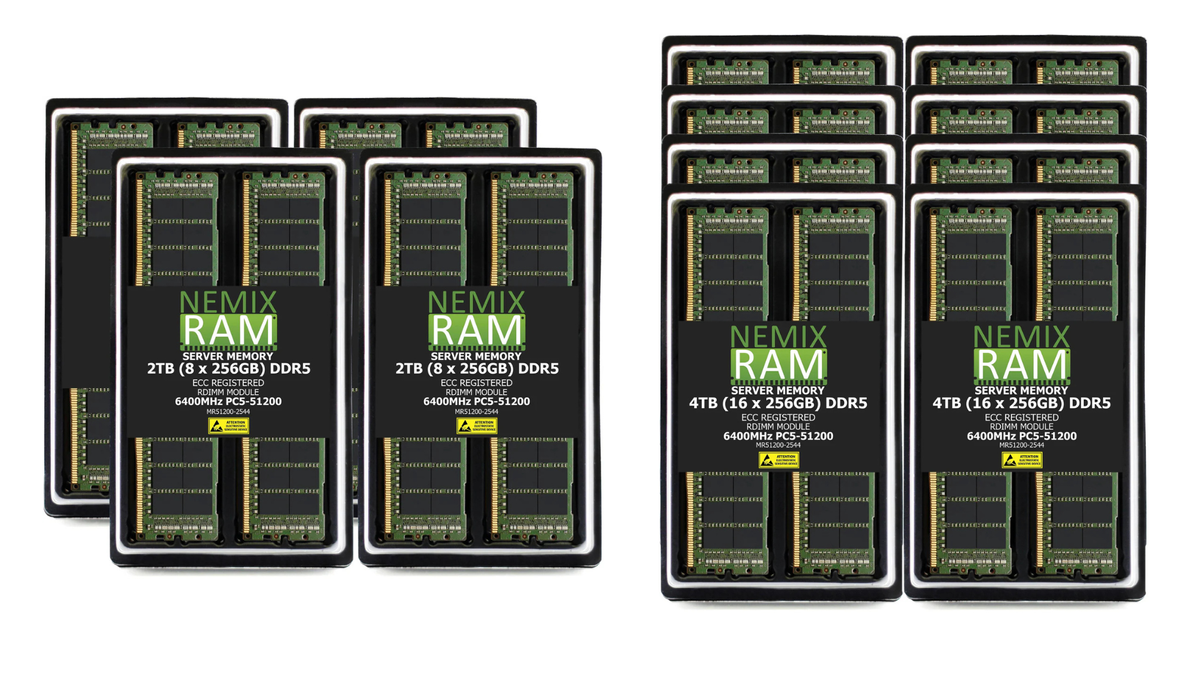

Since OpenAI has not revealed exact deployment details, the researchers had to guess at the hardware setup. They believe that OpenAI's latest AI model is likely deployed on Nvidia DGX H100 or DGX H200 systems hosted on Microsoft Azure. By multiplying the response time for a query by the hardware's estimated power draw, they arrived at watt-hour figures for different outputs, such as the 1,000-token benchmark they used for comparison.
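The core of the method is a single multiplication: energy equals average power multiplied by elapsed time, with seconds converted to hours to get watt-hours. The sketch below illustrates only that step. The power and latency values are hypothetical placeholders, not the lab's measured or assumed figures, and the function name is illustrative.

```python
def query_energy_wh(response_time_s: float, avg_power_w: float) -> float:
    """Energy in watt-hours = average power (W) x time (h)."""
    if response_time_s < 0 or avg_power_w < 0:
        raise ValueError("time and power must be non-negative")
    return avg_power_w * response_time_s / 3600.0  # 3,600 seconds per hour


# HYPOTHETICAL inputs for illustration only; not the lab's values.
HYPOTHETICAL_POWER_W = 6_000.0  # assumed average draw attributed to one query
HYPOTHETICAL_LATENCY_S = 12.0   # assumed time to produce a 1,000-token reply

print(f"{query_energy_wh(HYPOTHETICAL_LATENCY_S, HYPOTHETICAL_POWER_W):.1f} Wh")  # 20.0 Wh
```

The hard part in practice is the power term: how much of a multi-GPU server's draw should be attributed to a single request, which is exactly the number the researchers had to estimate.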


The hardware estimate also accounted for non-GPU components (e.g., CPUs, memory, storage, cooling) and applied Azure-specific environmental multipliers such as Power Usage Effectiveness (PUE), Water Usage Effectiveness (WUE), and Carbon Intensity Factor (CIF). Of course, if Azure and OpenAI actually run GPT-5 on Nvidia's Blackwell hardware (which is up to four times faster), the estimate could be well off.
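One plausible way to apply such multipliers, assumed here rather than taken from the lab's paper, is to add non-GPU energy to GPU energy to get IT energy, scale that by PUE (facility energy divided by IT equipment energy) to get total facility energy, estimate water as IT energy times WUE in liters per kWh, and estimate emissions as facility energy times a grid carbon intensity. The class, function, and all factor values below are hypothetical placeholders, not Azure's published numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SiteFactors:
    """HYPOTHETICAL data-center factors; not Azure's actual values."""
    pue: float             # facility energy / IT equipment energy (>= 1.0)
    wue_l_per_kwh: float   # on-site water per kWh of IT energy (L/kWh)
    cif_kg_per_kwh: float  # grid carbon intensity (kg CO2e per kWh)


def footprint(gpu_wh: float, non_gpu_wh: float, site: SiteFactors) -> dict:
    """Scale per-query IT energy into facility energy, water, and CO2e."""
    it_kwh = (gpu_wh + non_gpu_wh) / 1000.0      # GPU + CPU/memory/storage
    facility_kwh = it_kwh * site.pue             # adds cooling and overhead
    return {
        "facility_wh": facility_kwh * 1000.0,
        "water_l": it_kwh * site.wue_l_per_kwh,
        "co2e_g": facility_kwh * site.cif_kg_per_kwh * 1000.0,  # kg -> g
    }


# Placeholder inputs for illustration only.
site = SiteFactors(pue=1.2, wue_l_per_kwh=0.3, cif_kg_per_kwh=0.35)
print(footprint(gpu_wh=12.0, non_gpu_wh=3.0, site=site))
```

Each multiplier compounds the uncertainty in the underlying hardware guess, which is one reason the article's absolute figures should be read as rough estimates.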

GPT-5 uses a mixture-of-experts design, so not all parameters are active for every request, which reduces energy consumption for some short (or stupid) queries. But it also has a reasoning mode with extended processing time, which can raise energy use five- to tenfold for the same answer (i.e., beyond 40 Wh per query), according to researchers such as Shaolei Ren, cited by The Guardian.
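To illustrate why a mixture-of-experts model does not use all of its parameters per request, here is a generic top-k gating sketch. OpenAI has not published GPT-5's routing details, so this is the textbook technique, not GPT-5's implementation: a router scores every expert, only the k highest-scoring experts actually run, and their outputs are mixed using softmax weights. The experts are toy scalar functions and the router scores are hypothetical.

```python
import math
from typing import Callable, List, Tuple

Expert = Callable[[float], float]


def top_k_gate(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Pick the k highest-scoring experts and softmax-normalize their scores."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)               # subtract max for stability
    exps = [math.exp(logits[i] - m) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x: float, experts: List[Expert], logits: List[float],
                k: int = 2) -> Tuple[float, int]:
    """Mix only the selected experts' outputs; unselected experts never run."""
    selected = top_k_gate(logits, k)
    y = sum(w * experts[i](x) for i, w in selected)
    return y, len(selected)


# Eight toy "experts": expert j simply scales its input by (j + 1).
experts = [lambda x, s=j + 1: s * x for j in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 1.5, -0.3, 0.0, 0.2]  # hypothetical router scores
y, evaluated = moe_forward(1.0, experts, logits, k=2)
print(f"output={y:.3f}, experts evaluated={evaluated} of {len(experts)}")
```

Only two of the eight experts do any work here, which is the source of the per-request savings; a reasoning mode that generates many more tokens multiplies the total work regardless of how sparse each step is.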

While the University of Rhode Island AI lab's estimates give us an idea of how GPT-5's total power consumption compares with previous-generation models, the absolute numbers may not be accurate at all. What we do know, though, is that AI data centers are already driving up power bills in the U.S., and with the technology continuing to proliferate, all signs point to the power crunch getting worse.
