Data centers cost an incredible amount of money to build and require equally eye-watering amounts of electricity to run. But while companies might talk up their green credentials through improved hardware efficiency or renewable energy, one area that's often overlooked in their design and construction is the astronomical quantity of water they consume. The enormous scale of this year's data center and "AI factory" announcements doesn't just raise concerns about electricity use and environmental impact, but also about the unprecedented amount of water these facilities will consume just to stay cool.

A single data center can consume upwards of five million gallons of water per day, or enough for a small town of 50,000 people, says the Environmental and Energy Study Institute. Scaled up nationwide, the problem becomes far larger. In 2024, U.S. data centers were estimated to have consumed over 60 billion gallons of water through cooling; that figure, provided by the Lawrence Berkeley National Laboratory, excludes the water used for electricity generation and for on-site needs other than cooling.
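To put those figures on a human scale, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope check on the numbers quoted above: it assumes the five-million-gallon rate is sustained every day of the year, which is an upper-end assumption of ours rather than something either source states, and the variable names are purely illustrative.

```python
# Figures quoted in the article
GALLONS_PER_DAY = 5_000_000          # upper-end daily use of a single data center
TOWN_POPULATION = 50_000             # town size used for comparison
NATIONAL_GALLONS = 60_000_000_000    # estimated U.S. cooling water use in 2024

LITERS_PER_US_GALLON = 3.785411784   # definition of the U.S. liquid gallon

# Daily water per resident implied by the town comparison
per_person = GALLONS_PER_DAY / TOWN_POPULATION

# Assumption: the facility runs at the upper-end rate all 365 days
annual_gallons = GALLONS_PER_DAY * 365

# How many such upper-end facilities the national figure would represent
equivalent_facilities = NATIONAL_GALLONS / annual_gallons

print(f"{per_person:.0f} gallons per person per day")
print(f"{per_person * LITERS_PER_US_GALLON:.0f} liters per person per day")
print(f"{annual_gallons:,} gallons per facility per year")
print(f"about {equivalent_facilities:.0f} upper-end facilities nationwide")
```

The town comparison works out to 100 gallons (roughly 379 liters) per resident per day, and the national estimate is equivalent to about 33 facilities running at the upper-end rate all year. Most data centers use far less than that upper bound, so this is a sense of scale, not a count of real sites.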

Cooling is hard

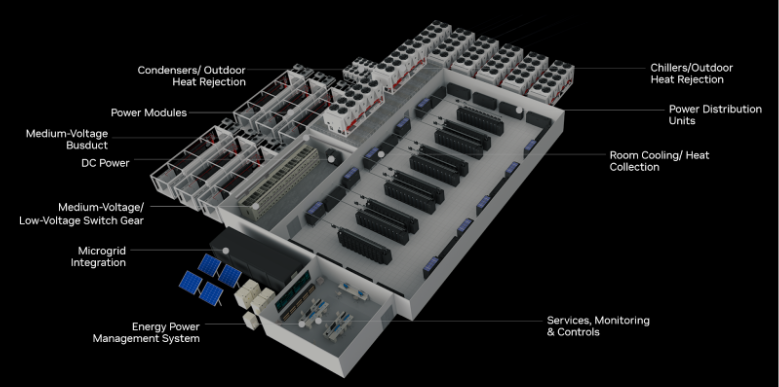

Cooling is crucial for data centers, as it affects performance and operational costs. Typically, this is handled in one of two ways: open-loop or closed-loop cooling. In an open loop, hot air from the data center is blown over water-moistened pads, or water is sprayed into the air as a fine mist. As the water evaporates, it lowers the temperature of the air, but that water is lost from the local water table completely, making this approach much more water-intensive.

Closed-loop liquid cooling cools components directly via water blocks and loops, or by immersing the hardware in the cooling liquid. In either case, electric chillers are used to bring the liquid's temperature back down once it has been heated. Little water evaporates with this technique, so water use is reduced, though electricity use and costs are typically far higher.

Of course, companies are also looking into more exotic cooling methods, such as direct-to-chip and immersion cooling, which can change how much water a facility uses.

Quantifying the issue

"Water use tends to receive much less attention than energy efficiency for sure," said Masao Ashtine, senior energy transition manager at The Carbon Trust.

"The narrative often focuses on reducing electricity consumption and carbon emissions, which are easier to quantify and headline. However, evaporative cooling systems, commonly used to cut energy use, can significantly increase water demand. This trade-off is rarely highlighted, even though it has real implications for local water resources, especially in water-stressed regions."

The sheer lack of available water was one of the concerns raised in the UK recently when many of the world's top AI companies announced data center projects in the country. That came just weeks after the British government announced measures to address a "nationally significant" water shortfall, including advice that citizens take shorter showers and delete old emails.

Another issue is local water quality. In 2024, Maryland state and county inspectors documented several "frac-out" events, in which drilling fluid and sediment were released into a tributary of the Monocacy River during underground drilling to lay fiber-optic cabling. Some of those fluids can have a disastrous effect on aquatic life, and the construction companies involved failed to report the issue effectively and continued to drill even after they were aware of the environmental impact.

"Most modern facilities are designed with strict discharge controls, but risks can arise from chemical treatments used in cooling systems or from improper wastewater management," explained Ashtine. "Localised impacts can definitely occur if safeguards aren’t properly enforced."

In evaporative cooling systems, the wastewater often contains salts and impurities and must either be cleaned via water treatment or discharged into the environment. This "blowdown" water can have an extremely high saline content and poses a real risk to local water sources. Because of this, some counties, like Fairfax, Virginia, now include the strict advisory that blowdown not be allowed under any circumstances, given the potential negative effect it would have on already stressed water sources.

Building a better (water) world

The solutions to data centers' water consumption issues are many, varied, and long-term. There is no turning off the tap to existing data centers, and there's no putting the AI genie back in the bottle. With hundreds of billions at stake and some of the world's largest governments and companies throwing their weight behind the AI arms race, these data centers are coming whether we like it or not.

But that doesn't mean there's nothing that can be done. Indeed, a lot can be done to make the problem less pronounced and to hold companies accountable for their water use and its effects on local environments and water sources.

It starts with accurate reporting. All the major tech companies publish yearly sustainability reports, but some do it better than others. Google uses more water than most other companies combined, but we only know this because it produces a yearly report on it. That report also digs down into the water use at individual data centers, which offers much greater insight than other companies' reports do.

Amazon, Meta, and Microsoft don't provide the same level of detail, though their latest reports suggest they're all looking to return more water to local sources than they consume by 2030, which shows they're at least aware of the issue.

These companies can also consider alternative cooling solutions in their facilities. The majority of UK data centers currently don't use water cooling at all. While that might be harder in hotter regions, it is doable. Google's Pflugerville, Texas, facility is air-cooled, rather than water-cooled, and uses barely any water compared to evaporative-cooled alternatives. Air cooling needs to be paired with renewable energy to ensure the savings aren't offset by greater water usage in power generation, but the potential is certainly there to better leverage natural cooling methods in data center construction.

"Some strategies that I have seen include recycling and reusing water within cooling systems, or even using non-potable water sources, such as reclaimed or rainwater," suggested Ashtine. "Locating facilities in water-abundant regions or near sustainable water sources is a no-brainer, too, but the logistic and economics will always trump in final decision making."

Inference with AI is effectively nonstop, but training isn't. If a company is going to spend millions of compute hours on a training project, could they run it overnight when it's cooler? Could they only use data centers with higher quantities of renewable power generation? What about a government mandate that only facilities with closed-loop cooling solutions can be used for AI training?

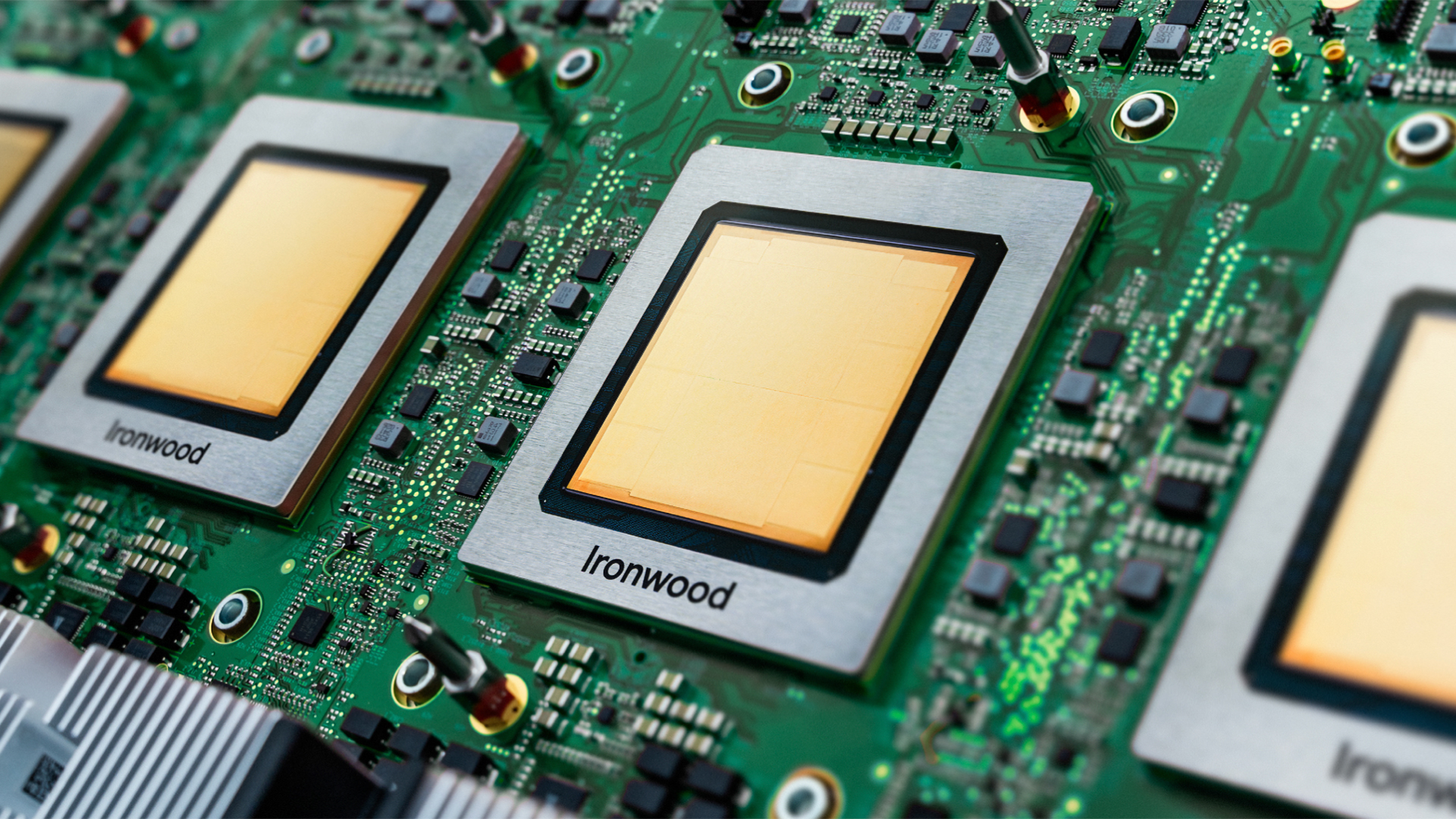

The hardware itself can be improved, too. Microsoft recently discussed microfluidic cooling for its chips, which could massively improve cooling efficiency and reduce overall hardware temperatures. The development of ASICs for AI will likely help, too, as general-purpose GPUs are incredibly power hungry. As we saw with cryptocurrency mining, we may see more bespoke and efficient chips developed, at least for AI inference workloads, which could reduce power and cooling demands at these facilities.

As for users? It's a drop in the proverbial and literal bucket, but every time you send an AI a prompt, it consumes water. Reports conflict on how much, with estimates ranging from 50ml to 500ml for every 5 to 50 responses, but there's no denying that it has an impact. Using AI more judiciously could help curb water use.
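The spread in those estimates is easier to appreciate per response. The short Python sketch below pairs the smallest quoted volume with the largest response count, and vice versa, to get the widest bounds the quoted range allows. That pairing is our assumption, since the reports don't say how the endpoints line up, and the figure of 20 prompts a day is a hypothetical chosen only for illustration.

```python
def ml_per_response(volume_ml: float, responses: int) -> float:
    """Water attributed to a single response, in milliliters."""
    return volume_ml / responses

# Widest bounds implied by "50ml to 500ml for every 5 to 50 responses"
low = ml_per_response(50, 50)    # least water spread over the most responses
high = ml_per_response(500, 5)   # most water spread over the fewest responses

DAILY_PROMPTS = 20  # hypothetical usage, not a figure from any report

daily_low_ml = low * DAILY_PROMPTS
daily_high_ml = high * DAILY_PROMPTS

print(f"{low:.0f}-{high:.0f} ml per response")
print(f"{daily_low_ml:.0f}-{daily_high_ml:.0f} ml per day at {DAILY_PROMPTS} prompts")
```

Under those assumptions a single response could account for anywhere from 1ml to 100ml, a hundredfold spread, which is why better reporting matters as much as individual restraint.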

The most important part, though, is just being more informed. Water usage at these facilities, and indeed across our technology as a whole, often takes a back seat to electricity demand. That's likely warranted while greenhouse gas emissions are of greater concern than water shortages, but the balance is likely to shift over the course of this century. Being mindful now might help make that problem far less pronounced in the future.
