Every time a new tool comes along, people worry it will ruin us.

The printing press would corrupt faith, the calculator would kill math skills, and email was supposed to wreck productivity.

Now it’s AI’s turn. The pattern is familiar. It starts with a mix of hype, fear, and confusion.

Fractional CISO at DeepTempo and founder/CEO of BLodgic Inc.

Today, leaders and employees alike question whether AI is truly necessary or if it risks more than it rewards.

Skepticism often follows close on the heels of investment. Although 76% of enterprises are exploring GenAI for innovation, only about 40% of consumers trust generative AI outputs, citing concerns about accuracy and transparency.

The truth is, AI isn’t some brand-new alien force. It’s been part of our daily lives for decades.

Think of recommendation engines on Amazon, Netflix queues, voice assistants like Siri, even the Roomba you set loose in your living room.

These are all AI. The difference is that generative AI feels closer, touching how we write, code, and create. That proximity makes the stakes feel higher.

But this time, AI is hitting a little differently. It's not just about automating tasks in the background. AI is colliding head-on with knowledge workers, and that is making people uneasy. Is “expertise” itself up for debate?

All gas, no brakes

AI adoption is anything but cooling off. In 2024 alone, U.S. private AI investment grew to $109.1 billion, and generative AI attracted $33.9 billion in private investment globally, an 18.7% increase from 2023.

The underlying AI technologies have matured over decades, but the leap in generative models, including LLMs, capable of producing text, images, and code has thrust the technology into the cultural spotlight like never before.

This acceleration is forcing organizations to make adoption decisions under heightened public scrutiny. Boards are pushing for speed, while regulators and security teams are still trying to set the necessary ground rules.

What’s truly different this time is the perceived immediacy of impact. GenAI touches knowledge work directly, challenging the established norms around expertise, authorship, and control over intellectual output.

That’s creating friction between innovation advocates and those wary of eroding human judgment. The result is a polarizing debate, inconsistent adoption strategies, new security threat vectors, and regulators pointing fingers at one another like the Spider-Man meme.

For sectors like law, finance, healthcare, and academia, the stakes couldn’t be higher, because trust and reputation are their currency. At the same time, resisting adoption entirely can mean falling behind competitors who are getting ahead of AI and making it work for them.

If adoption races ahead without safeguards, institutions risk losing credibility with customers, with regulators, and with their own people, who may leave for a competitor that is getting it right.

On the flip side, dismissing AI altogether and sitting on the side of the pool is just as dangerous. Your competitors have already jumped into the deep end with AI.

Fear vs. FOMO misses the point

The AI conversation needs to move beyond fear-based binaries. Like calculators in the classroom or email in the workplace, AI is not inherently good or bad. Put simply, it's a capability, or a form of co-intelligence.

The real differentiator is how leaders guide adoption and balance technical safeguards with cultural adaptation. Leaders, along with the AI startups chipping away at the old guard, will show how to integrate AI deliberately with the right guardrails, culture, security, and transparency.

For CISOs, CIOs, and other decision-makers, the goal should be to reject the false choice between total embrace and outright ban. Instead, organizations should aim for deliberate integration: start with a proof of concept (PoC) and anchor the rollout in transparent governance.

Host lunch-and-learns to educate users, and clearly align your use of AI with your organization's values and culture. The human element still matters, especially now.

A teaching moment for all

When it comes to AI, getting clear on what “expertise” means should be a priority. Get a bird's-eye view of what's possible and what's already happening.

Take a fresh look at your workflows and figure out where AI can take the busywork off people’s plates, and where you still need human judgment in the driver’s seat.

Don’t be afraid to be explicit about where you need human judgment and where AI can safely take on repetitive work. Full visibility is essential, because anything you leave vague will be open to wide interpretation.

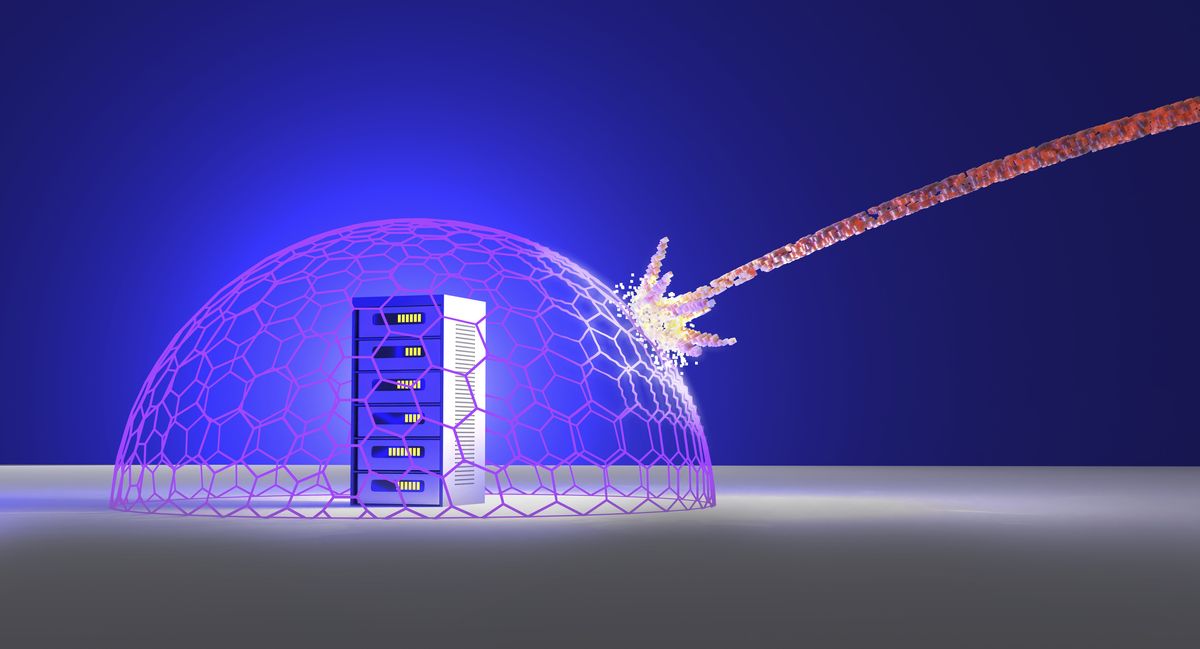

In cybersecurity, this clarity shows up every day. I have seen AI help filter through thousands of alerts that would otherwise bury a security team. The model does the grunt work and flags patterns that look suspicious. The human then steps in and decides if it is noise or a real attack.

When that line is clear, the team stays sharp. When it is not, the team either trusts the model too much or drowns in alerts. The stakes are high because one missed decision can be the difference between blocking an intrusion and reading about it later in the news.

Give full, sometimes even tedious, explanations of how AI can be used, what data it can touch, how outputs will be validated, and how credit is assigned. That gets you off on the right foot.

AI know-how shouldn’t live in the IT department alone. When you make AI everyone’s business, it becomes much easier to ensure your employees have the rights and wrongs on lock. If only IT understands the risks, you’re sunk.

Give people across the organization the tools and knowledge to use it responsibly, from spotting bias to knowing when to trust its answers and when to question them.

Start small but learn fast: test AI in low-risk areas first, see what works, and tweak as you go. Keep an eye on how it’s performing with machine learning operations (MLOps) practices. Measure what matters, and let the business's knowledge workers build the metrics and KPIs. Make QA cool again.

The printing press, calculator… and now AI

When AI is done right, it sharpens the edge. The printing press did not end learning. The calculator did not kill math. Email did not destroy productivity. Each one raised the floor and gave people space to work on what mattered more.

AI should be treated the same way. It is not here to erase trust or weaken systems. It is here to amplify what people do when paired with clear governance and accountability. Progress lasts when people see it makes life better without taking away integrity.

Trust is not earned by press releases. It is earned by proof. Show employees that AI has guardrails. Show customers that security and privacy come first. That is how credibility takes hold.

Credibility lasts when AI is transparent and accountable. That is how we defend trust in cybersecurity and that is how we shape the future.


This article was produced as part of TechRadarPro's Expert Insights channel where we feature the best and brightest minds in the technology industry today. The views expressed here are those of the author and are not necessarily those of TechRadarPro or Future plc. If you are interested in contributing find out more here: https://www.techradar.com/news/submit-your-story-to-techradar-pro
