What just happened? Working with international researchers, Meta has announced two studies that mark milestones in understanding human intelligence. The first presents an AI model that reads brain signals to reconstruct typed sentences; the second maps the neural processes that transform thoughts into spoken or written words.

The first study, carried out by Meta's Fundamental Artificial Intelligence Research (FAIR) lab in Paris in collaboration with the Basque Center on Cognition, Brain and Language in San Sebastian, Spain, demonstrates that sentence production can be decoded from non-invasive brain recordings. Using magnetoencephalography (MEG) and electroencephalography (EEG), researchers recorded brain activity from 35 healthy volunteers as they typed sentences.

The system employs a three-part architecture consisting of a convolutional module, a transformer, and a pretrained language model. The convolutional module extracts features from short windows of the MEG or EEG signal. The transformer then integrates these features across the whole sentence. Finally, the character-level language model corrects the transformer's output to produce a plausible sentence.

The results are impressive: the AI model can decode up to 80 percent of characters typed by participants whose brain activity was recorded with MEG, which is at least twice as effective as traditional EEG systems. This research opens up new possibilities for non-invasive brain-computer interfaces that could help restore communication for individuals who have lost the ability to speak.
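To make the 80 percent figure concrete, a decoder of this kind is commonly scored by comparing the decoded text with what the participant actually typed, character by character. The sketch below assumes an edit-distance-based character error rate and reports accuracy as one minus that rate. It illustrates the metric only and is not Meta's evaluation code; the function names and example strings are hypothetical.

```python
def edit_distance(reference: str, hypothesis: str) -> int:
    """Levenshtein distance: minimum insertions, deletions, substitutions."""
    # prev[j] holds the distance between the reference prefix seen so far
    # and the first j characters of the hypothesis.
    prev = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        curr = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            cost = 0 if ref_char == hyp_char else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(reference: str, hypothesis: str) -> float:
    """Edit distance normalised by the length of the typed reference."""
    if not reference:
        raise ValueError("reference must not be empty")
    return edit_distance(reference, hypothesis) / len(reference)


def character_accuracy(reference: str, hypothesis: str) -> float:
    """Share of characters recovered, clipped at zero."""
    return max(0.0, 1.0 - character_error_rate(reference, hypothesis))


if __name__ == "__main__":
    typed = "brain data"    # what the participant typed (hypothetical)
    decoded = "brqin dxta"  # what the model produced (hypothetical)
    print(f"{character_accuracy(typed, decoded):.2f}")  # prints 0.80
```

Under this definition, a decoder that gets two characters wrong in a ten-character sentence scores 80 percent, the same level the article reports as the upper bound for MEG.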

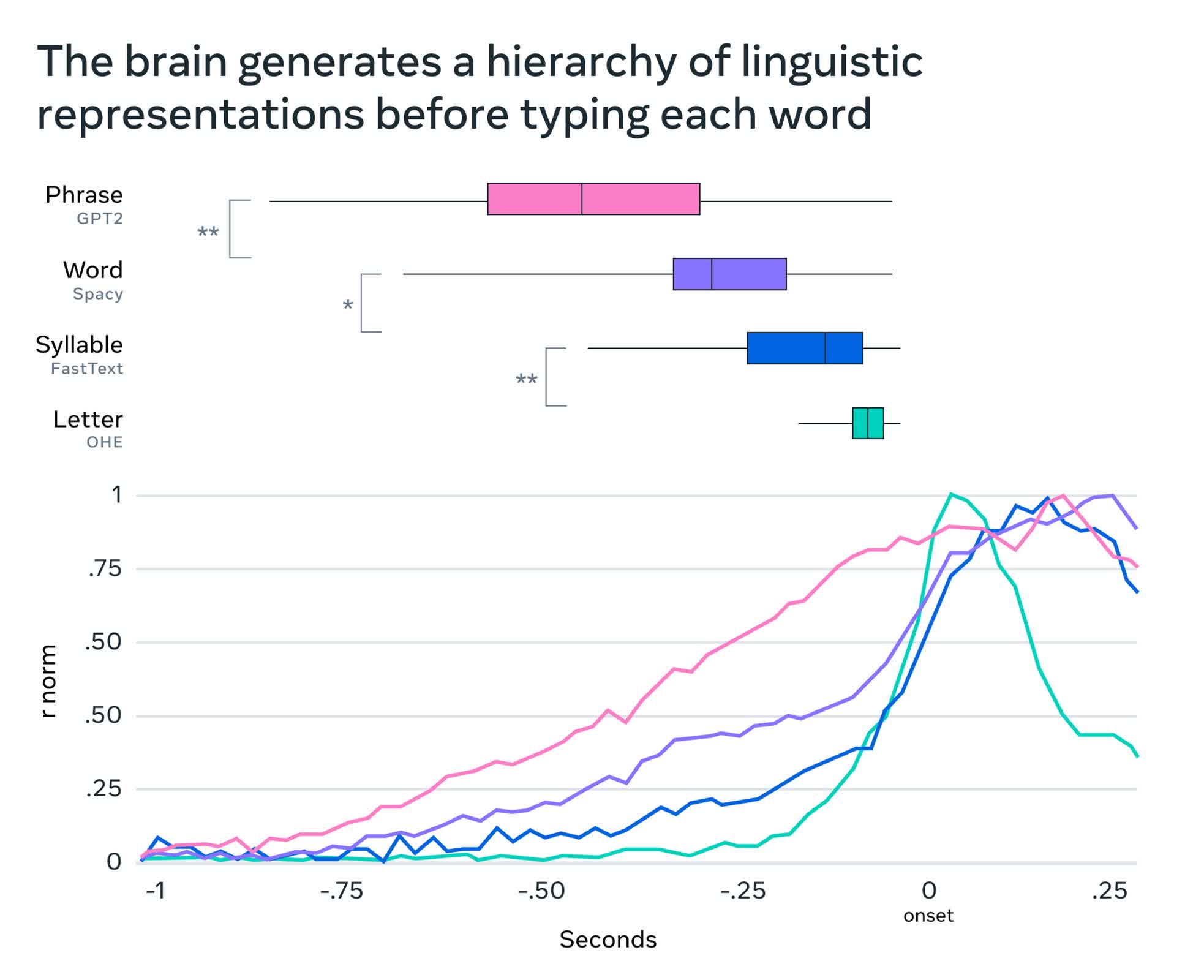

The second study focuses on understanding how the brain transforms thoughts into language. By using AI to interpret MEG signals while participants typed sentences, researchers were able to pinpoint the precise moments when thoughts are converted into words, syllables, and individual letters.

This research reveals that the brain generates a sequence of representations, starting from the most abstract level (the meaning of a sentence) and progressively transforming them into specific actions, such as finger movements on a keyboard. The study also demonstrates that the brain uses a 'dynamic neural code' to chain successive representations while maintaining each of them over extended periods.

While the technology shows promise, several challenges remain before it can be applied in clinical settings. Decoding performance is still imperfect, and MEG is impractical outside the lab: the scanner is large and expensive, subjects must remain still, and recordings must take place in a magnetically shielded room because the Earth's magnetic field is a trillion times stronger than the one in the brain.

Meta plans to address these limitations in future research by improving the accuracy and reliability of the decoding process, exploring alternative non-invasive brain imaging techniques that are more practical for everyday use, and developing more sophisticated AI models that can better interpret complex brain signals. The company also aims to expand its research to include a wider range of cognitive processes and explore potential applications in fields such as healthcare, education, and human-computer interaction.

While further research is needed before these developments can help people with brain injuries, they bring us closer to building AI systems that can learn and reason more like humans.
