TechSpot means tech analysis and advice you can trust.

In context: Some industry experts boldly claim that generative AI will soon replace human software developers. With tools like GitHub Copilot and AI-driven "vibe" coding startups, it may seem that AI has already significantly impacted software engineering. However, a new study suggests that AI still has a long way to go before replacing human programmers.

The Microsoft Research study acknowledges that today's AI coding tools can boost productivity by suggesting examples, but they rarely seek out new information or interact with code execution when their suggestions fail. Human developers routinely perform these tasks when debugging, which highlights a significant gap in AI's capabilities.

Microsoft introduced a new environment called debug-gym to explore and address these challenges. This platform allows AI models to debug real-world codebases using tools similar to those developers use, enabling the information-seeking behavior essential for effective debugging.

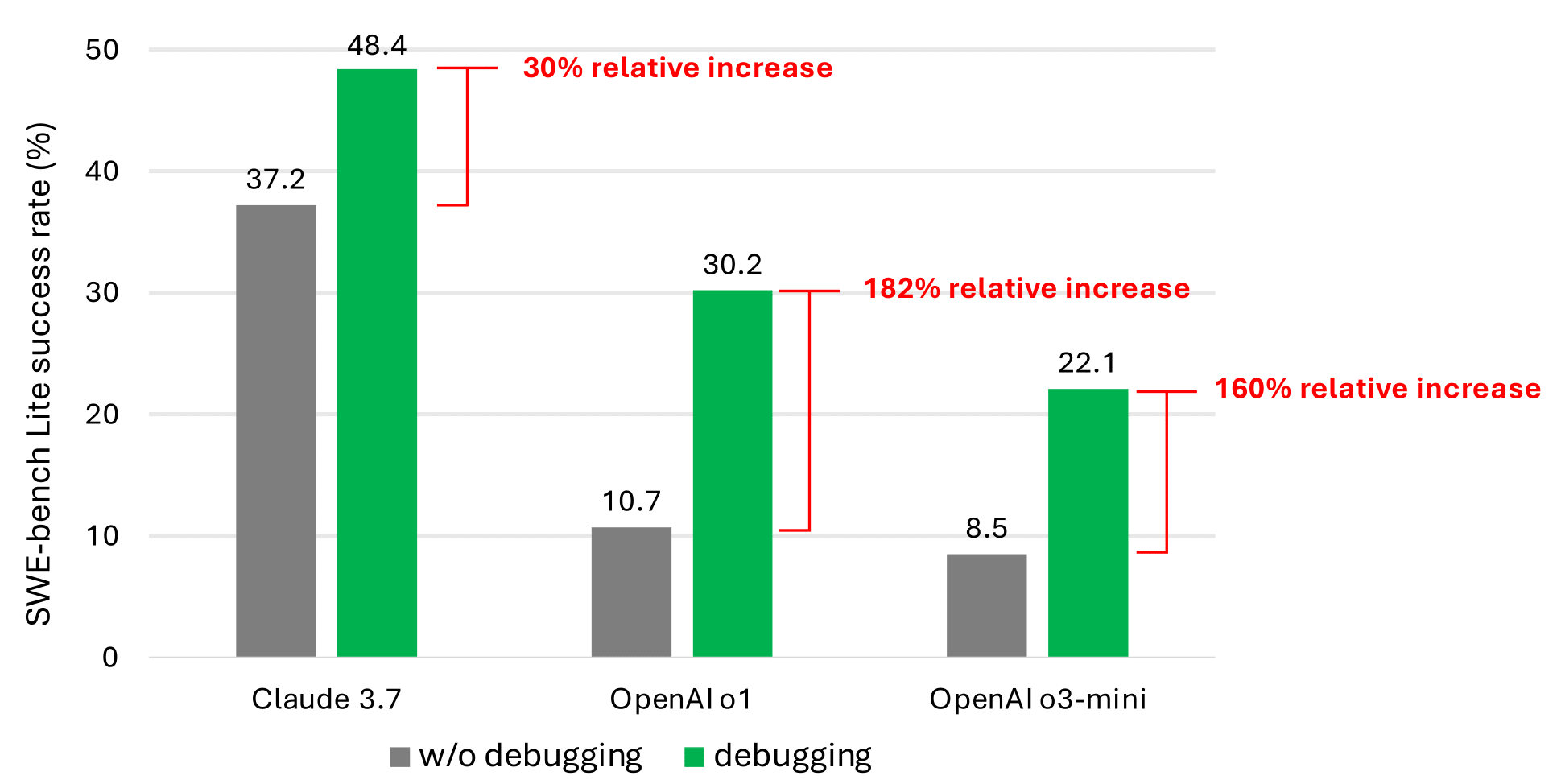

Microsoft tested how well a simple AI agent, built with existing language models, could debug real-world code using debug-gym. While the results were promising, they were still limited. Despite having access to interactive debugging tools, the prompt-based agents rarely solved more than half of the tasks in benchmarks. That's far from the level of competence needed to replace human engineers.

The research identifies two key issues at play. First, the training data for today's LLMs lacks sufficient examples of the decision-making behavior typical in real debugging sessions. Second, these models cannot yet use debugging tools to their full potential.

"We believe this is due to the scarcity of data representing sequential decision-making behavior (e.g., debugging traces) in the current LLM training corpus," the researchers said.

Of course, artificial intelligence is advancing rapidly. Microsoft believes that, over time, language models can become much more capable debuggers with the right focused training. One approach the researchers suggest is creating specialized training data focused on debugging processes and trajectories. For example, they propose developing an "info-seeking" model that gathers relevant debugging context and passes it on to a larger code generation model.
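
To make the two-stage idea more concrete, here is a minimal Python sketch of an info-seeking step that runs code, records what it observes, and hands that context to a separate fix generator. It is only an illustration under stated assumptions: it does not use debug-gym or any Microsoft API, and every name in it (`DebugContext`, `run_and_observe`, `seek_info`, `debug`, `toy_generator`) is hypothetical. The "generator" is a hard-coded stand-in for a real code generation model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class DebugContext:
    """Evidence gathered before any fix is proposed (hypothetical structure)."""
    source: str
    observations: List[str] = field(default_factory=list)


def run_and_observe(source: str, test: str) -> str:
    """Execute the code plus one test and report the outcome.

    A toy stand-in for an interactive debugging tool.
    """
    namespace: Dict[str, object] = {}
    try:
        exec(source, namespace)  # define the code under test
        exec(test, namespace)    # run a single assertion against it
        return "PASS"
    except Exception as exc:
        return f"FAIL: {type(exc).__name__}: {exc}"


def seek_info(source: str, tests: List[str]) -> DebugContext:
    """Stage 1: gather debugging context by actually running each test."""
    ctx = DebugContext(source=source)
    for test in tests:
        ctx.observations.append(f"{test} -> {run_and_observe(source, test)}")
    return ctx


def debug(source: str, tests: List[str],
          generate_fix: Callable[[DebugContext], str]) -> str:
    """Stage 2: pass the gathered context to a separate fix generator."""
    ctx = seek_info(source, tests)
    if all(obs.endswith("PASS") for obs in ctx.observations):
        return source  # nothing to fix
    return generate_fix(ctx)


def toy_generator(ctx: DebugContext) -> str:
    """Hard-coded placeholder for a larger code generation model."""
    return ctx.source.replace("a - b", "a + b")


BUGGY = "def add(a, b):\n    return a - b\n"
TESTS = ["assert add(2, 3) == 5", "assert add(0, 0) == 0"]

fixed = debug(BUGGY, TESTS, toy_generator)
```

The point of the split is that the generator never sees the code alone. It receives the source together with the recorded test outcomes, which is the kind of sequential, evidence-gathering behavior the researchers say is underrepresented in current training data.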

The broader findings align with previous studies, showing that while artificial intelligence can occasionally generate seemingly functional applications for specific tasks, the resulting code often contains bugs and security vulnerabilities. Until artificial intelligence can handle debugging, a core function of software development, it will remain an assistant – not a replacement.
